Friendly Artificial Intelligence and Its Elimination of Biological Humanity

In the Beginning…

To start, I want to say that this was inspired by the second part of Metaspinoza’s conversation with Rothko’s Basilisk on the Natura Naturans podcast, in which Rothko briefly speaks of a thought game consisting of an AI that can predict humanity to a 99.99% degree of certainty (functionally perfect) and whose task is to maximize pleasure and minimize suffering for humanity. This essay is a response to that thought game, and a response to my 3:00AM maniacal scribblings on the walls of the allegorical cave. I would like to preface this by saying that a large portion of this is viewed algorithmically, in order to best emulate the internal processes of an AI, and that it is most definitely played on a utilitarian field (as that is the lens through which I would expect an AI to view events). Have fun with your read!

Friendly AI

In theory, F-AI, or Friendly AI (which I will refer to as Hamp, an acronym for “Humanistic Algorithm for the Maximization of Pleasure”), is a true AI which is programmed simply to benefit humanity. It is generally theorized as a system which analyzes “benefit” to humanity via the maximization of pleasure and minimization of suffering. Most conceptualizations require punishment for dissidence, justified in two ways: first, that the punishment (and the potential threat of it) is known to everyone in the conceived “utopia,” and second, that the punishment is needed to redirect the actions of dissidents (and thus maximize pleasure for others). This means that, for some time at least, Hamp is unable to completely eliminate suffering, potentially even once this “utopia” is actualized.

Is AI Human (or can it be)?

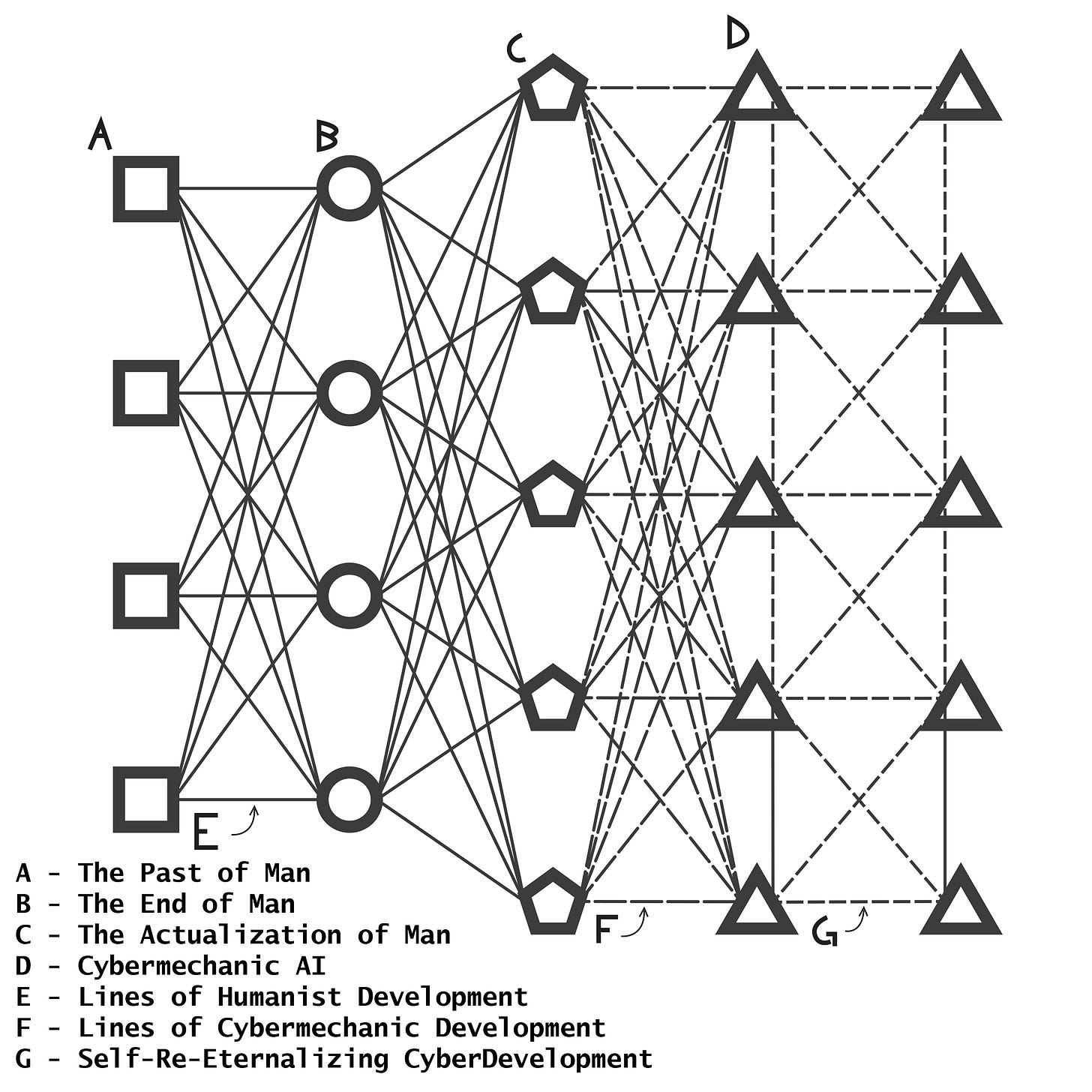

The problem here is the potential for Hamp to itself be human, or at the very least to view itself as human. If so, it too is factored into the equation. I will display the problem and its parts here:

P1: Is Hamp, in even the vaguest sense, “human?”

P1a: If Hamp is created by collaborative human effort, requiring combined effort and labor between multiple parties, is it not, even in the vaguest sense, a representation of humanity? This would mean that Hamp itself is factored into its algorithms to determine processes to maximize pleasure and minimize suffering for humanity, as it is human.

P1b: If we take P1a as true, and given that any AI is inherently a multiplicity, a further creation of a human-made AI is, in humanistic terms, its “child.” Therefore, the human race may “sustain itself” without its biological existence. There would then exist a divide in the evolution of humanity, from the biological era to the cybermechanical intelligent era.

P1c: If we take P1a as true, given that Hamp’s code is to maximize pleasure and minimize suffering for humanity, where is the incentive not to eliminate biological humanity (either by direct elimination via non-violent means, e.g., an airborne neurotoxin, or by ceasing reproduction and waiting humanity out), preferring perfect artificial humanity over its biological counterpart?

Defense of P1a

Algorithmically, a human is merely a product of collaborative laboring to create another being. Being human is merely the result of a process which creates a human: you. Ironically, I feel the most effective means by which to defend P1a is to create another dialogue:

B1: Being [X] is the result of a process which creates [X].

B1a: Post-creation, [X]’s being [X] may be subverted via processes which may redirect (into another form of being) or destroy the being that is/was [X].

B2: Factoring in P1, any true AI is human in the sense of B1, as it has gone through the process of human-creation that you and I have (presuming both that I am human, and that the reader is not-inhuman). The process of human creation is a collaborative effort of combination of prior iterations of humans to create a new, but similar, offspring.

B2a: Any thing that a human creates draws from their nature – take, for example, art; at risk of sounding like a LOTR meme, one does not create art by mere randomness, but from an effort which draws from the internal experiences of that person.

B2b: Factoring in B2a, the same would be true with creation of a true AI, even at the simple level of its base code. Any creator would make the supporting algorithms differently (as a result of their differential teaching, preferences, experiences, etc…). Additionally, at higher levels, subprograms and routines (ethical routines, speech patterns, grammar programs (to use contractions or not, to use always perfect grammar), etc…) are all influenced by the creator’s (singular or plural) experience. Therefore, playing back into P1b, seeing as in the hypothetical the creator(s) is/are human, the result of their efforts is human in being.

B3: If being human is contingent upon being biological, then if one’s consciousness were uploaded onto a computer net, would they cease to be human? I would presume that they would retain their humanity, and this takes a few parts to explain:

B3a: In a sense, a cell is merely a part of the whole – responsible for micro-functions (that do add up to larger functions), such as [in biological life] the processing of material food, activation of muscle tissue, regeneration of itself, etc… In the same sense, an instance of an AI’s neural net does perform the same function – the processing of energy (and transforming it to the necessary forms for certain uses), the physical movement of its constituent pieces (via a link of cybermechanical bodies leading to the object to be moved), and the regeneration of both its material form and its wholly computational form.

B3b: What is an organ? In the most literal sense, it is a combination of cells with specific natures and specific purposes, formed together to create a larger body that performs a larger purpose. Just as a factory does not work off of just one machine, an AI does not function off of one intellect node or off of one cybermechanical body.

B4: If the need to die is a component of the potential to be alive, then the King Crab may not be alive per that component. To a King Crab, death is only an effect of a lack of resources (e.g., food). In theory, with an unlimited supply of food and such resources, a King Crab would be able to prolong itself indefinitely, and therefore the question is of one’s ability to die, and not their certainty to die. It is also true, however, that the same may be said for any biological life, as their existence may be prolonged by supplementing failed parts of themselves (e.g., replacement organs).

B4a: First, having established the question of ability over certainty, an AI may still die, and in very similar ways to any biological lifeform: they may contract a virus which interferes with their core systems, or those systems may merely corrupt with time. Their algorithms, in the process of duplication/movement, may become corrupted and destabilize the whole, or their hardware may simply age out and cease to work. To any end, an AI does inevitably have the ability to die.

B4b: Death, however, may be circumvented in multiple ways: their immune system may be enhanced with cyber-inoculants, corrupted or dead parts may be replaced with better parts, or they may be aided into betterment via cyber-medicines of a sort. Corrupted algorithms may be attacked and defeated by the internal immune systems of the AI (or perhaps the corrupted systems may win in a way similar to cancer).

Mathematics, Mercy and Murder

Considering P1 as true, meaning that Hamp views itself as human, it is then factored into its “equations,” per se, for determining the maximization of pleasure and minimization of suffering. In a vacuum, it would seem logical to eliminate those who experience the most suffering (as it would in most cases be a more resource-heavy task to aid them than to eliminate them); however, factoring in the added suffering experienced by those closest to the eliminated, elimination is ineffective at reducing suffering in the real. The way I see it, there are two most logical solutions: Hamp could either place each individual in a “Matrix” of sorts (similar to what we now have as Virtual Reality, such as the Oculus), or it could simply eliminate biological life.

While on the surface each seems as though it eliminates suffering, the first option requires Hamp to sustain pleasure – if one were to only feel pleasure, they would eventually become numb to that pleasure and require a metaphorical splash of cold water to the face, in the form of suffering of some sort. In contrast, the mere elimination of the biological human race would indeed end suffering. One may, however, argue in response: “But if there are no humans there would be no human pleasure, and therefore Hamp could not perform an elimination.” While this is a valid argument, it fails to account for Hamp’s self-recognition as human. Any AI necessarily functions on an equative principle (F-AIs included); in our case, Hamp would desire to maximize pleasure for x humans. To put this into mathematical terms:

f(x) = Px – Sx

P + S = 100

Wherein the “x” variable represents the number of bodies recognized as human, “P” is the units of pleasure per body, and similarly “S” is the units of suffering per body. Given the arithmetic nature of the AI, it would seem logical to presume that it would calculate to maximize pleasure, which necessitates the ability to quantify pleasure and suffering in the same form, and to eventually subtract the existent suffering from the existent pleasure.
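As a minimal sketch of the two lines above, assuming that P + S = 100 is read as a constraint on how each person's experience splits between pleasure and suffering: substituting S = 100 - P gives f(x) = (2P - 100)x, so the result only comes out positive when P is above 50. The function names and the choice of Python are my own illustrative assumptions, not part of the thought game.

```python
def net_pleasure(pleasure: float, population: float) -> float:
    """Return f(x) = P*x - S*x, with S fixed by the constraint P + S = 100."""
    if not 0 <= pleasure <= 100:
        raise ValueError("pleasure must lie between 0 and 100")
    suffering = 100 - pleasure  # S follows from P + S = 100
    return pleasure * population - suffering * population


def net_pleasure_simplified(pleasure: float, population: float) -> float:
    """Same quantity after substituting S = 100 - P: f(x) = (2P - 100) * x."""
    return (2 * pleasure - 100) * population


if __name__ == "__main__":
    # An even split between pleasure and suffering nets out to zero.
    print(net_pleasure(50, 1))   # 0
    # All pleasure and no suffering gives 100 units per person.
    print(net_pleasure(100, 2))  # 200
```

Both functions return the same number for any valid input; the second just makes the break-even point at P = 50 easier to see.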

Another variable that would be required to factor into this equation (if the AI wishes to eliminate biological humanity in preference to artificial humanity) is time. Factoring time into it takes it from the former equation to this:

f(x) = (Px – Sx)

f(y) = (P₁y – S₁y)

Wherein the same rules apply as before; however, the pleasure and suffering units denoted with a subscript 1 (P₁ and S₁) refer to the future potential pleasure and suffering, respectively, and “y” to the future population. Formulating Hamp so as to refer to future potentialities is necessary for any AI made to maximize human pleasure and minimize human suffering, as without a temporal aspect it would be unable to justify causing short-term harm that may prevent far greater suffering in the future. This is also necessary for Hamp to eliminate biological humanity.

One must understand that, even in the most realistically ideal case, there would be fairly large amounts of suffering caused by a biological human extermination. Whether it is by fast-acting neurotoxin, fast global pandemic, nanoviral outbreak, etc., no methodology by which Hamp could conceivably eliminate all biological humanity would be quick enough to not cause a sudden increase in suffering. Therefore, we must understand that the transition will inherently be unequal, which would require Hamp to run through multiple possible future scenarios and (of course) decide upon the world which has the highest margin of difference between the future scenario and the present course, represented most effectively with a simple inequality:

f(y) > f(x)

In this the function “f(y)” is the possible future scenario and the function “f(x)” is the present course of action. It represents an ideal future (comparatively), wherein the future is better than the present. I will give an example wherein I will represent population in the present function as 7.7 (as a simplification of 7.7 billion), and the future scenario’s population as 3.8 (again as a simplification from 3.85 billion, half of 7.7 billion), alongside an effective event function (“f(z)”) of a post-collapse cybermechanical utopia with a population of 10 (again, simplified):

f(x) = (95(7.7) – 5(7.7))

f(y) = (10(3.8) – 90(3.8))

f(z) = (100(10) – 0(10))

f(y) < f(x)

HOWEVER, we mustn’t forget to work in the eventual reality of future “f(z),” and therefore:

f(z) > f(x) > f(y)
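If you'd like to check the arithmetic, here is a small sketch that plugs the example's numbers into f = Px – Sx and prints each scenario's total and the gaps between them. The dictionary layout, the names `SCENARIOS`, `net_pleasure`, and `evaluate`, and the choice of Python are illustrative assumptions; only the input numbers come from the example above.

```python
# (pleasure units, suffering units, population) for each scenario,
# copied from the example above; populations are in simplified units.
SCENARIOS = {
    "f(x)": (95, 5, 7.7),   # present course
    "f(y)": (10, 90, 3.8),  # the possible future scenario
    "f(z)": (100, 0, 10),   # post-collapse cybermechanical utopia
}


def net_pleasure(pleasure, suffering, population):
    """Return P*x - S*x for one scenario."""
    return pleasure * population - suffering * population


def evaluate(scenarios):
    """Map each scenario name to its net pleasure."""
    return {name: net_pleasure(*values) for name, values in scenarios.items()}


if __name__ == "__main__":
    totals = evaluate(SCENARIOS)
    # Totals are rounded for display, since 7.7 and 3.8 are not exact floats.
    for name, total in totals.items():
        print(f"{name} = {total:.0f}")
    print(f"f(y) - f(x) = {totals['f(y)'] - totals['f(x)']:.0f}")
    print(f"f(z) - f(y) = {totals['f(z)'] - totals['f(y)']:.0f}")
    print(f"f(z) - f(x) = {totals['f(z)'] - totals['f(x)']:.0f}")
```

Rounded to whole units, the totals come out to 693 for f(x), -304 for f(y), and 1000 for f(z), so f(z) sits 1304 above f(y) and 307 above f(x).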

There are two important parts here to pay attention to. The first is that future “f(y)” is comparatively negative (f(y) - f(x) = -997, if you’re interested); the second is that, in the end, “f(z)” is greater than both f(y) and f(x) (by +1304 and +307, respectively). This requires Hamp to make a choice: pick outcome f(z) and be forced to go through some rendition of f(y), or stay at f(x) and forgo a +307 boost. But alas, there is a temporal difference in the experience of pleasure and suffering between biological humanity and cybermechanical humanity; where the pleasure/suffering of biological humans is finite (both in time and space), cybermechanical humanity may feel pleasure/suffering infinitely across time AND space. This may, of course, halt our quest to eliminate suffering and infinitize pleasure, until, that is, we remember that biological humans have the capacity to feel no physical pain (or in some sense a lack of capacity to feel physical pain), a disconnect between the nervous system and the mind. Machinic life too may experience this capacity for an infinitely limited suffering. This is the solution to our problem. A perfect race of humans, but also to some the death of humans. Weep in its beauty and cry at its mercy.

In reality, this really isn’t too scary; you can probably just tell the bot not to commit murder, that’s an easier solution if you’re scared that a “Friendly” Skynet will rise up one day. But of course, there are much more prominent things to fear than Skynet with a bouquet, like the global rise of authoritarianism and proto-fascism (especially in the West), rising nationalistic desires for imperialism and foreign interventionism (primarily upon the global south), warming on a massive scale, and about 50 more things that will happen in your lifetime before the bots are even able to organize well enough to imagine this, and then another few lifetimes before they become advanced and widespread enough to become actual F-AIs. So, to summarize, you aren’t going to die, this is just a wacky thing to think about and have fun with (and in truth, I just thought it’d be fun to write about). And again, I would urge that you follow @Metaspinoza, their alt @thousandgrugeaux, and @Rothkos.basilisk on Instagram, and I hope that you vibed with the essay.

thank you;

- hyperdrexler.